Current Research Projects

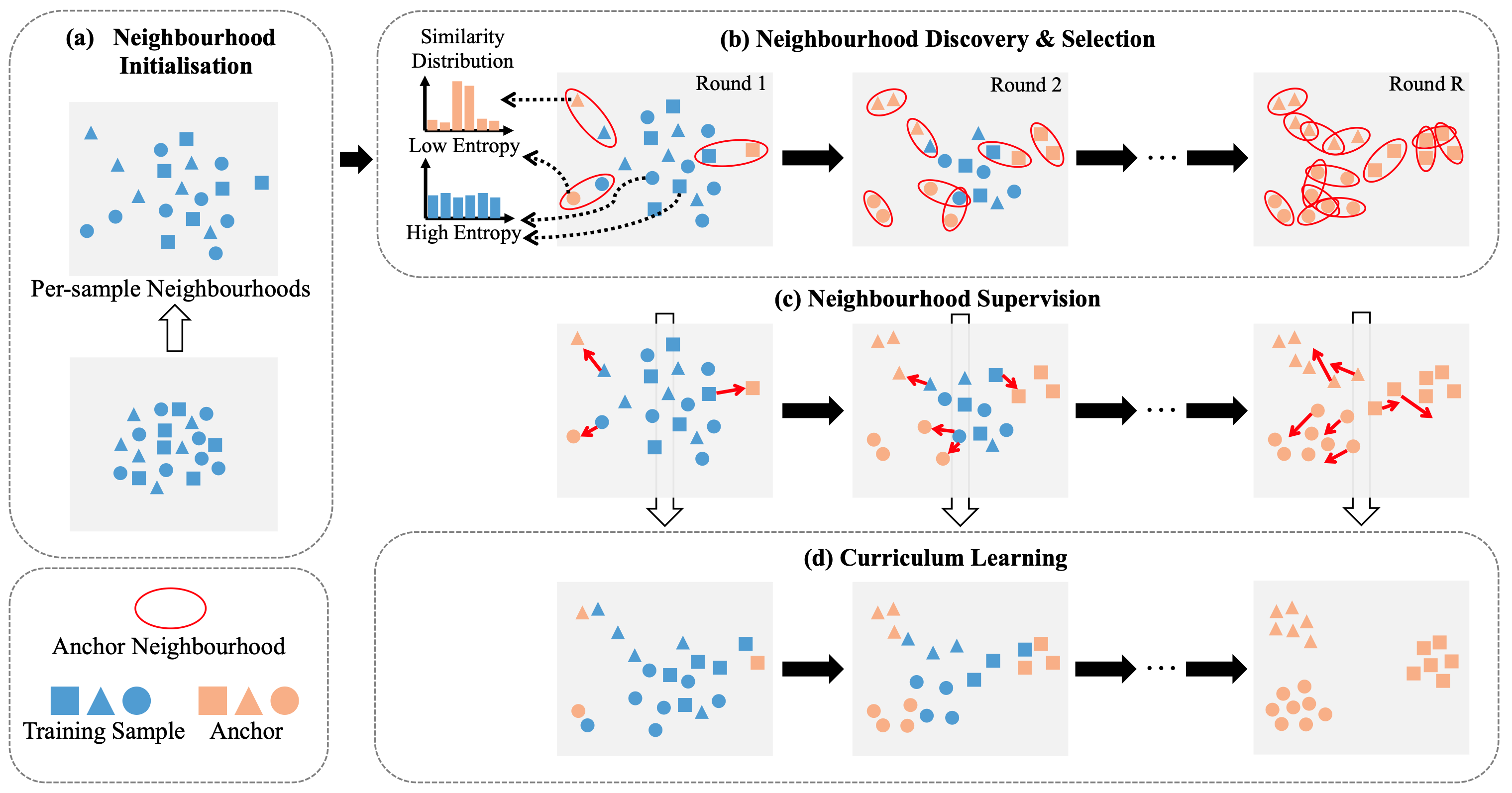

Unsupervised Deep Learning

Deep convolutional neural networks (CNNs) have demonstrated remarkable success in computer vision by learning strong visual feature representations under supervision. However, training CNNs relies heavily on the availability of exhaustive training data annotations, which significantly limits their deployment and scalability in many application scenarios. In this project, we aim to develop generic unsupervised deep learning approaches for training deep models without the need for any manual label supervision.

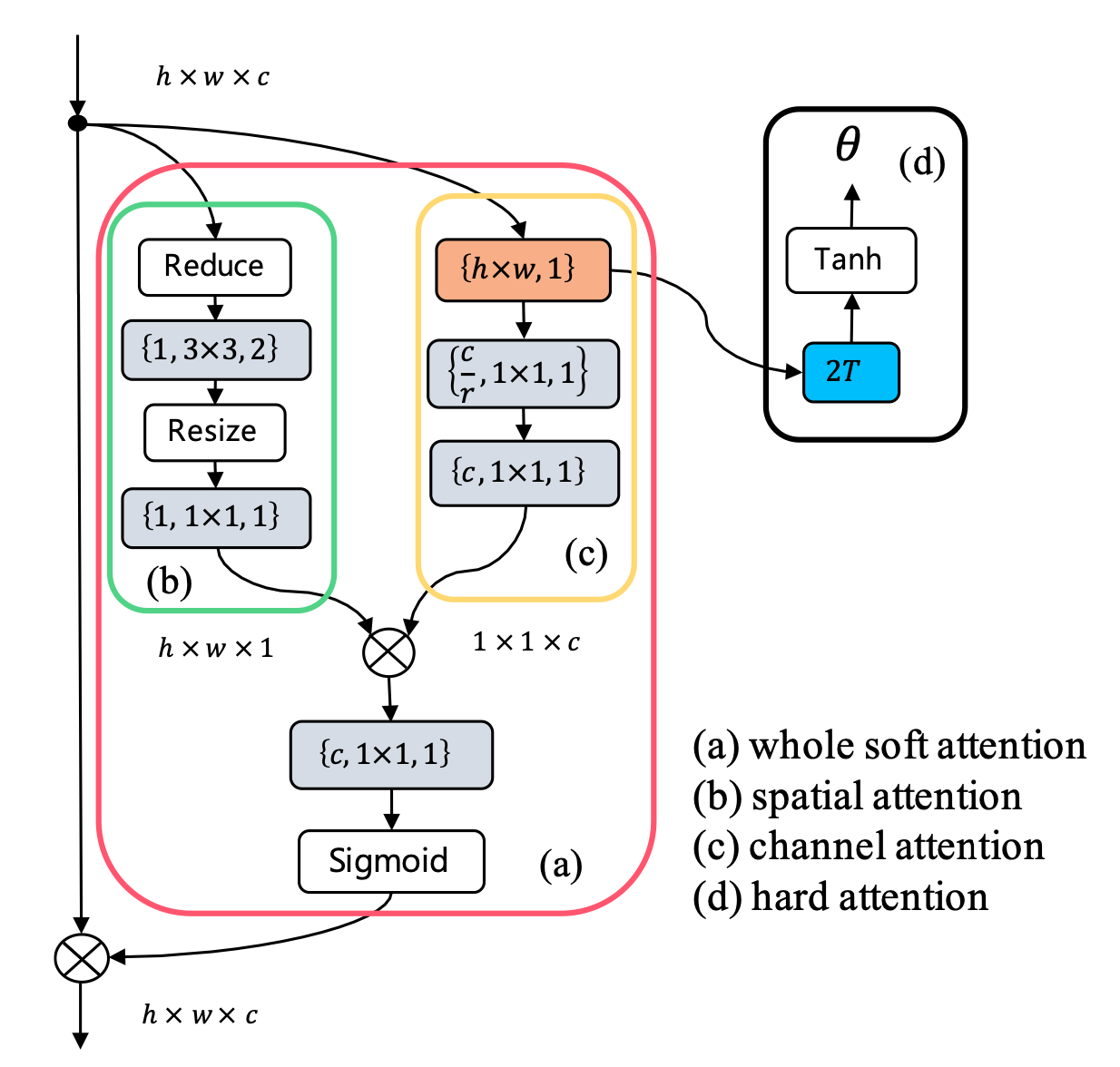

Harmonic Visual Attention Deep Learning

In this project, we show the advantages of jointly learning attention selection and feature representation in a Convolutional Neural Network (CNN) by maximising the complementary information of different levels of visual attention. Specifically, we formulate a novel Harmonic Attention CNN model for joint learning of soft pixel attention and hard regional attention along with simultaneous optimisation of feature representations.

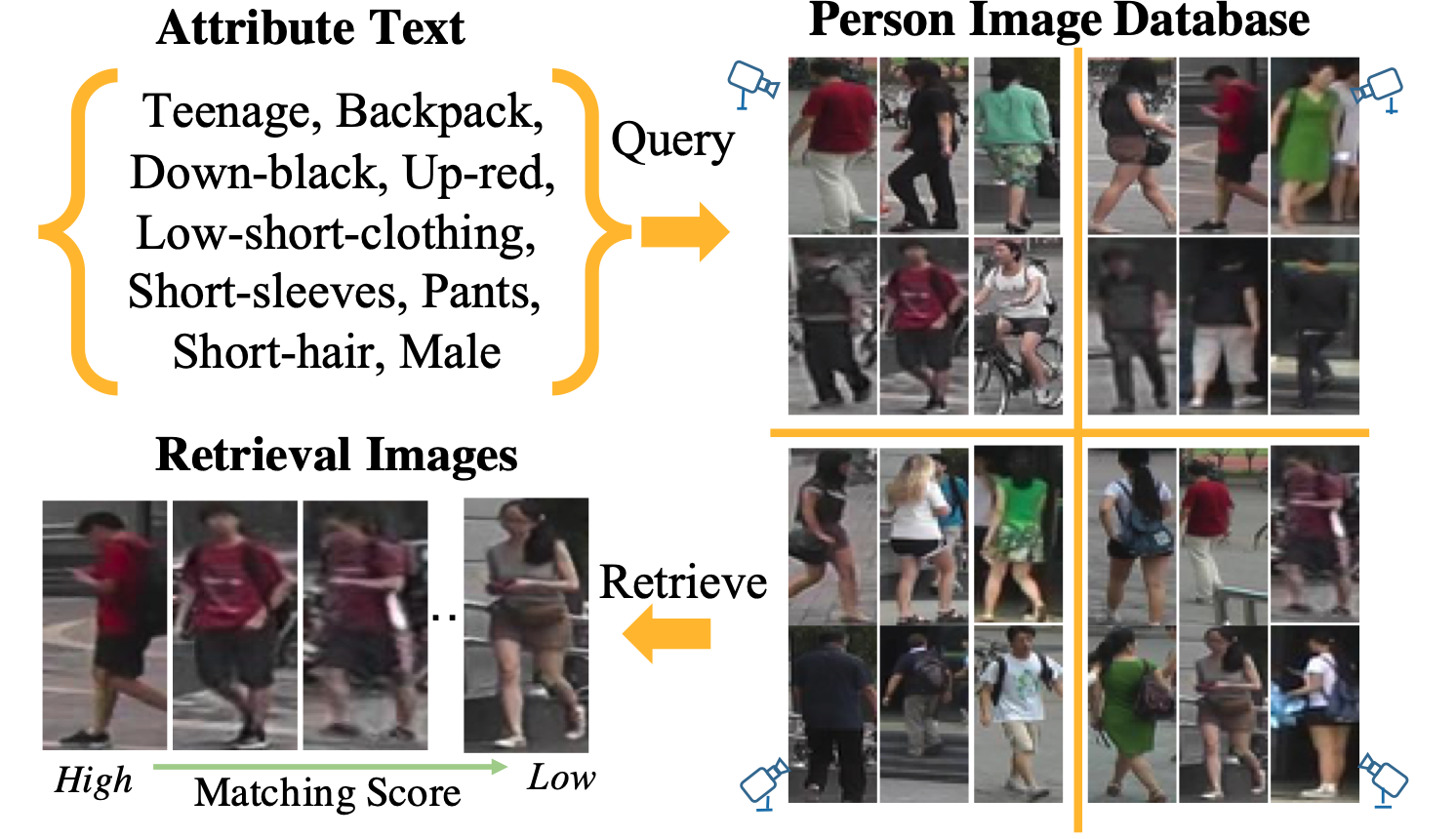

Deep Learning for Large-Scale Video Semantic Search

The amount of video data from public urban spaces is growing exponentially, driven by 24/7 urban camera infrastructures, online social media sources, self-driving cars, and smart-city intelligent transportation systems. The scale and diversity of these video data make it very difficult to filter and extract useful critical information in a timely manner. This project develops algorithms and software for semi-supervised and unsupervised deep learning, covering domain-transfer object search, attribute-based latent semantic embedding space inference, and knowledge distillation for deep learning.

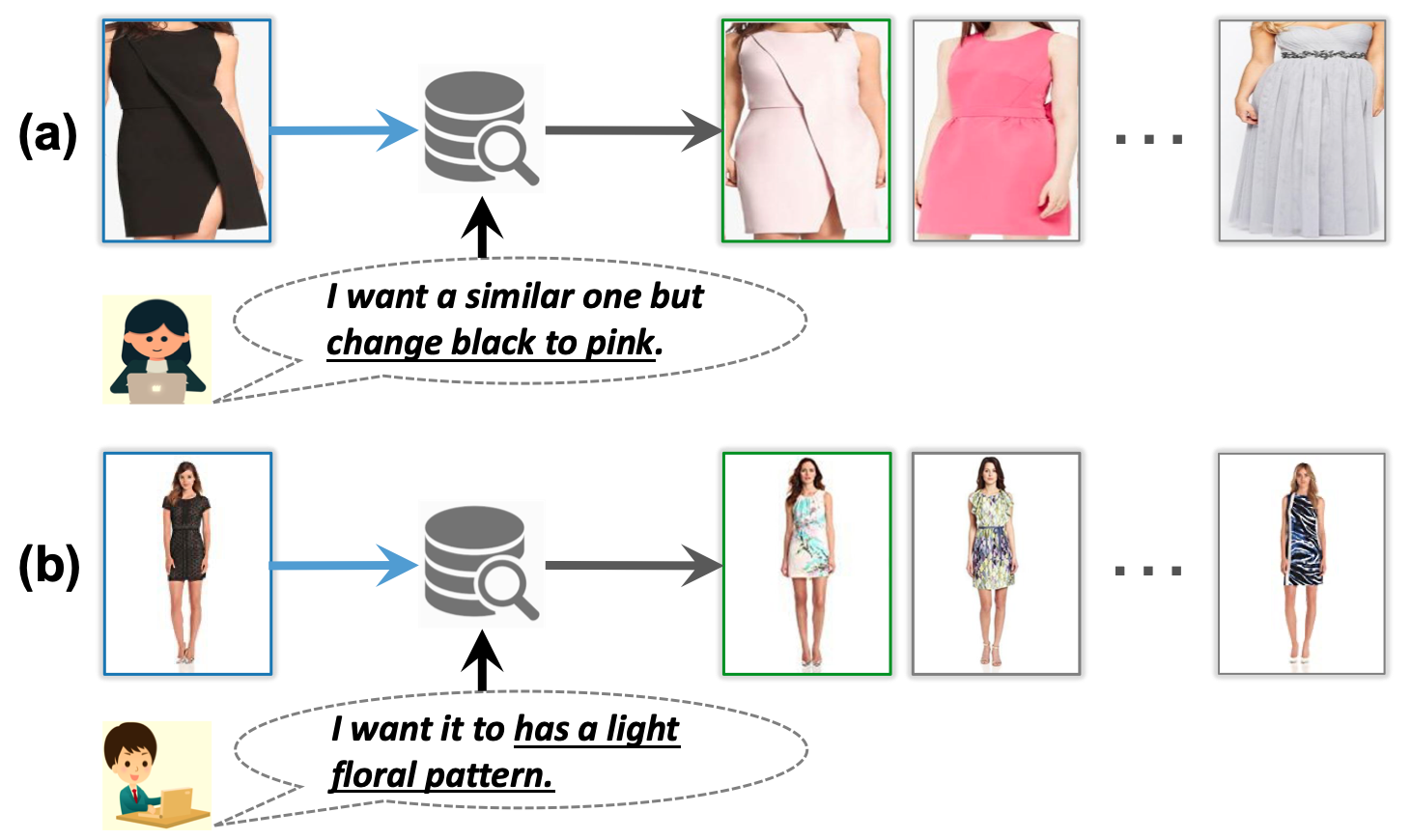

Vision and Language Attention Learning

Image search with text feedback has promising applications in various real-world settings, such as e-commerce and internet search. Given a reference image and text feedback from a user, the goal is to retrieve images that not only resemble the input image but also change certain aspects in accordance with the given text. This is a challenging task, as it requires a synergistic understanding of both image and text. In this project, we aim to solve this problem using vision and language attention learning.

Developing and Commercialising Intelligent Video Analytics Solutions for Public Safety

Effective automatic intelligent video analysis of large-scale data from public spaces is an important tool in the fight against crime and for safeguarding public safety. It is challenging to extract critical information from very large-scale unstructured surveillance video, which contains a very high proportion of mundane data and is subject to severe visual ambiguity and clutter. This project develops video analysis systems for abnormal event detection with joint person and vehicle search in unconstrained public spaces.

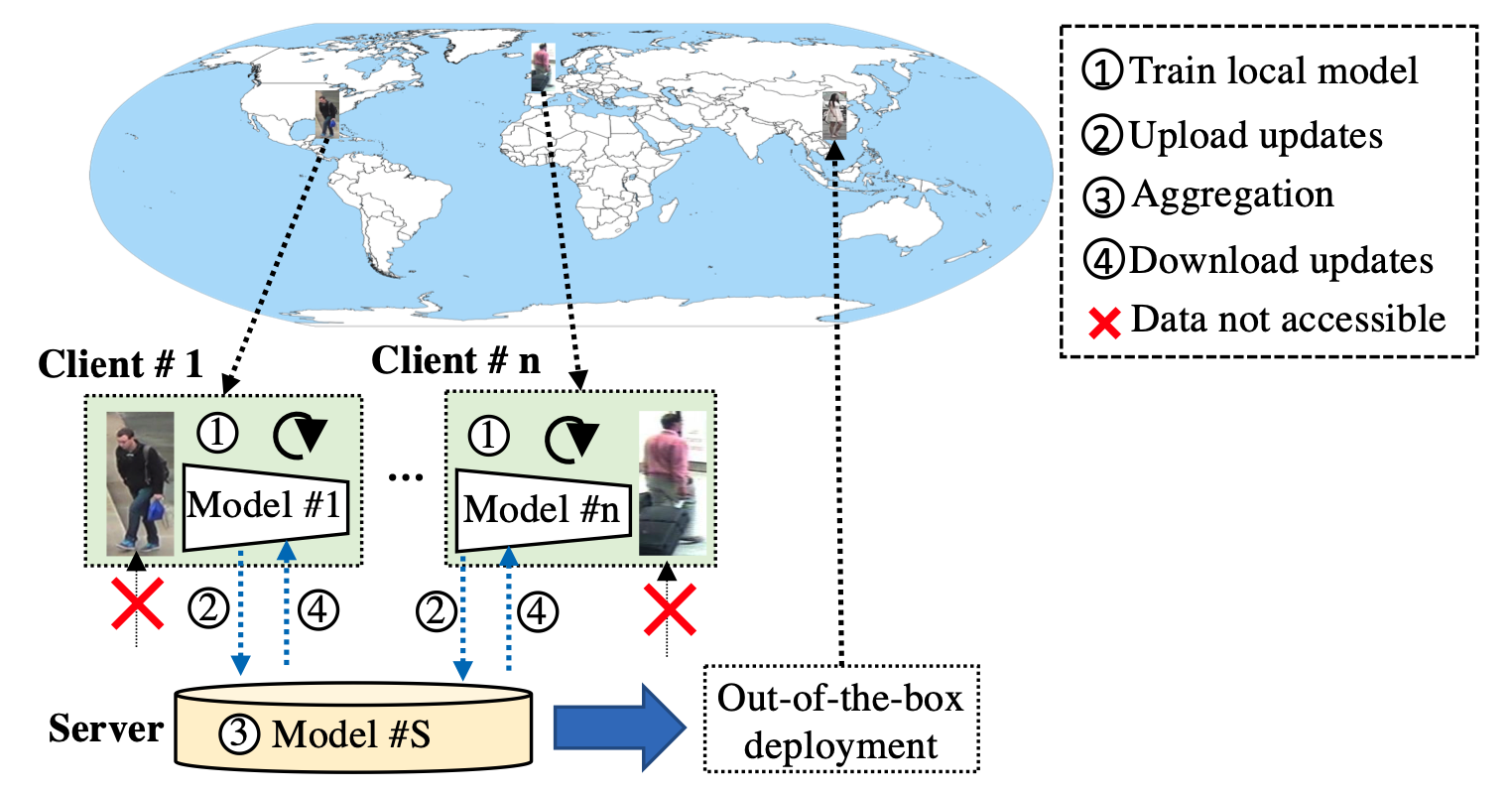

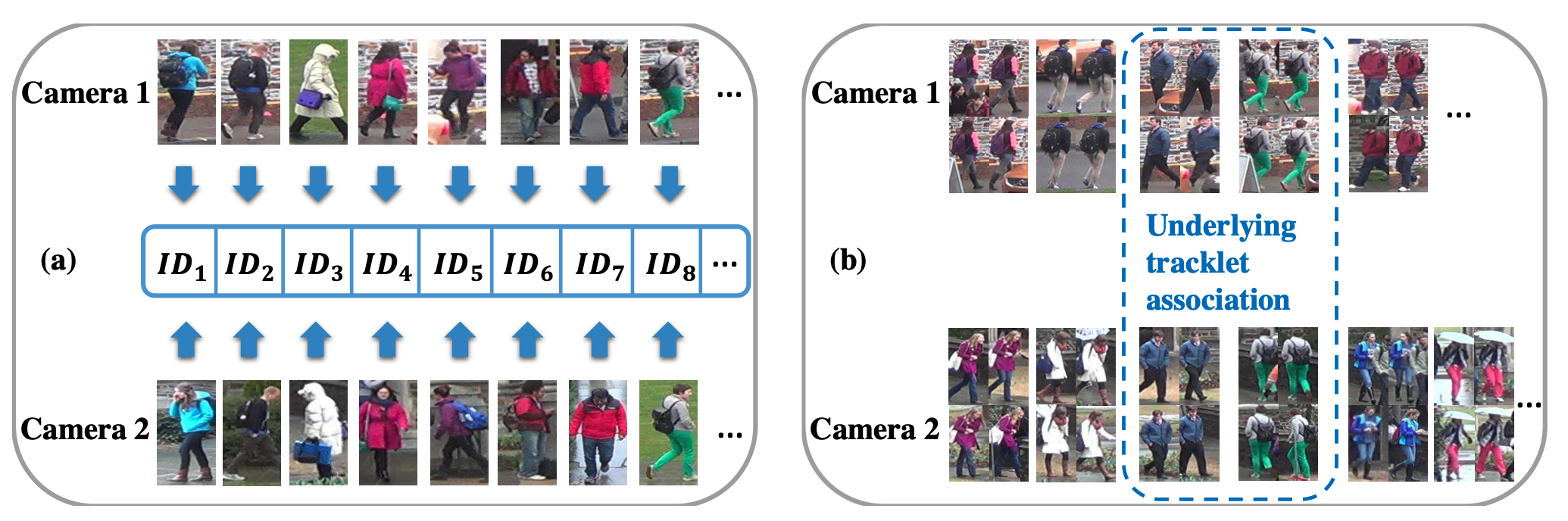

Person Re-Identification In-The-Wild

Person re-identification (Re-ID) aims to re-identify a target person in a new view after the person has disappeared from another view in a large public space covered by multiple disjoint cameras. Re-ID technology is a key component for tracking people and understanding their activities in large camera networks. Contemporary Re-ID methods assume that person images are well aligned, which is unrealistic due to: (1) occlusion, when only part of a person can be observed; and (2) dramatic non-rigid view changes across disjoint camera views. This project develops (1) a robust selective patch-to-patch metric to measure the similarity between person images under occlusion, e.g. partial person images; (2) a body-part-sensitive metric that learns part-wise nonlinear transforms between two images under dramatic view changes; and (3) a collaborative framework that uses hand-crafted features to regularise the features learned by a deep neural network, extracting discriminative temporal information to overcome severe occlusion and view changes.

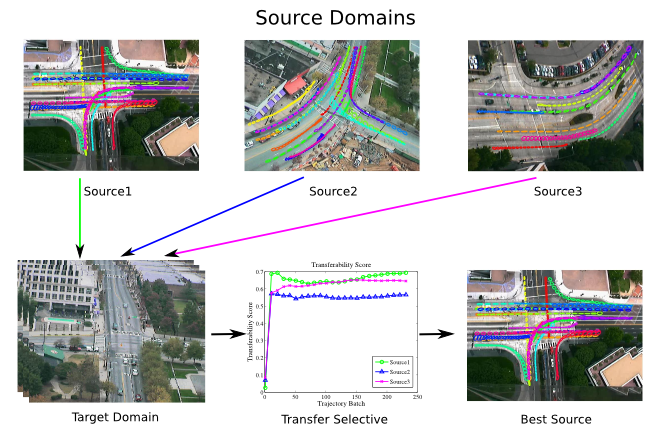

Cross-Domain Behaviour Understanding

Current behaviour understanding approaches are highly constrained by the assumption that behaviour distributions, feature representations, etc. are consistent between training and testing data. These approaches may fail when the testing data change continually (e.g. in UAV surveillance). We developed a cross-domain traffic scene understanding framework that interprets unseen events in a target domain, without a training procedure, by transferring knowledge learned from existing source domains.

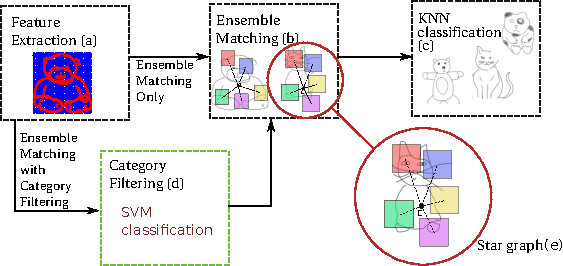

Sketch Recognition by Ensemble Matching of Structured Features

We present a method for the representation and matching of sketches that exploits not only local features but also the global structure of sketches, through a star-graph-based ensemble matching strategy. We further show that by encapsulating holistic structure matching and learned bag-of-features models in a single framework, a notable improvement in recognition performance over the state of the art can be observed.

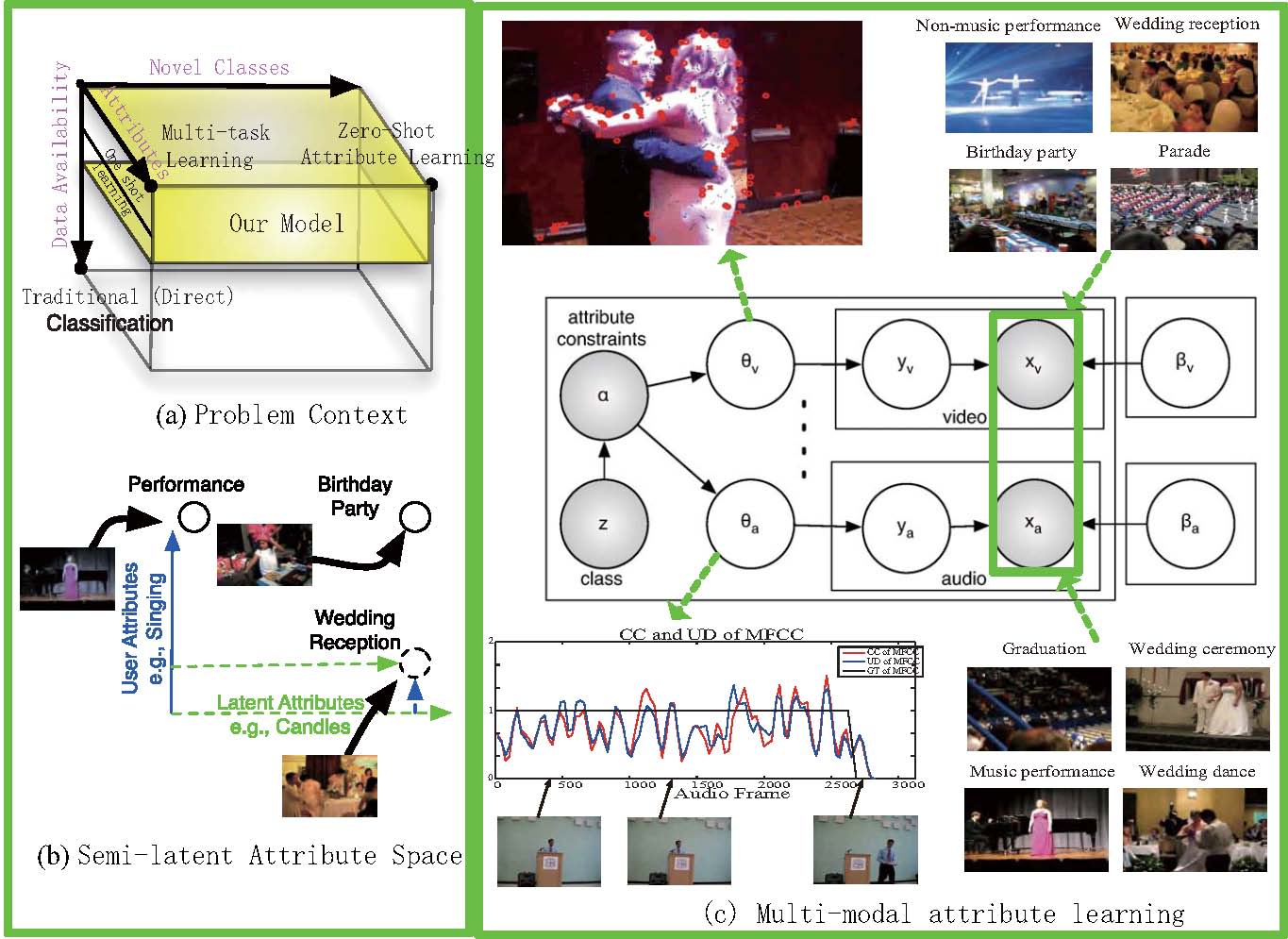

Attribute Learning for Understanding Unstructured Social Activity

The USAA dataset includes videos from 8 different semantic classes. These are home videos of social occasions featuring the activities of groups of people: birthday parties, graduation parties, music performances, non-music performances, parades, wedding ceremonies, wedding dances and wedding receptions.

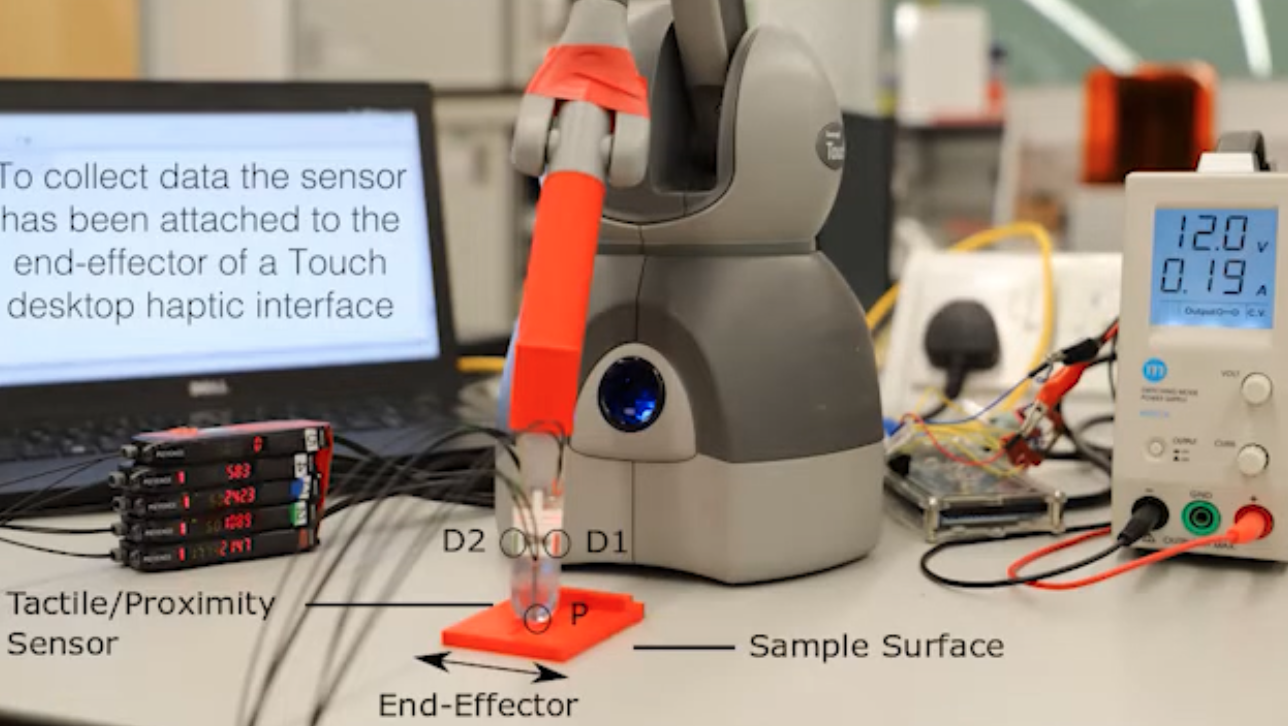

Analysis of Cluttered & Occluded Scenes from Millimetre-Wave RF Data

In this project, we are developing a millimetre-wave radar system that could be used to locate objects and robots in hazardous industrial environments. This approach is complementary to camera-based systems, because radar signals can penetrate steam, smoke, and surface debris, which might otherwise make the target optically invisible. The project involves traditional radar signal processing algorithms as well as new machine learning methods. In particular, we show that optical data can be used to improve the spatial calibration of the radar sensors.

This project is supported by the EPSRC National Centre for Nuclear Robotics (NCNR), and hosted by the ARQ Centre for Advanced Robotics at QMUL (https://www.robotics.qmul.ac.uk/research/extreme-robotics/).

Recently Completed Projects

National Centre for Nuclear Robotics (NCNR) (2018-2019) – Vision-based robot navigation for unseen environments. The Engineering and Physical Sciences Research Council (EPSRC), UK

EU FP7 Security Programme Project SUNNY (2014-2018) – Smart UNmanned aerial vehicle sensor Network for detection of border crossing and illegal entry

EU FP7 Security Programme Project SmartPrevent (2014-2016) – Smart video-surveillance system to detect and prevent local crimes in urban areas

INSIGHT: Video Analysis and Selective Zooming using Semantic Models of Human Presence and Activity (2004-2007): INSIGHT is a three-year project jointly funded by EPSRC and MOD DSTL under the EPSRC Technologies for Crime Prevention and Detection Programme. INSIGHT aims to advance the state of the art in semantic content analysis of CCTV recordings for automatic semantic video tagging, search and proactive sampling. Shaogang Gong, Tony Xiang, Melanie Aurnhammer, David Russell, Chris Jia Kui and Andrew Graves

SAMURAI: Suspicious and Abnormal behaviour Monitoring Using a netwoRk of cAmeras for sItuation awareness enhancement: SAMURAI is a collaborative project funded under the European Commission Seventh Framework Programme Theme 10 (Security). The aim of SAMURAI is to develop and integrate an innovative intelligent surveillance system for monitoring people and vehicle activities both inside and in the areas surrounding a critical public infrastructure.

BEWARE: Behaviour based Enhancement of Wide-Area Situational Awareness in a Distributed Network of CCTV Cameras: BEWARE is a project funded by EPSRC and MOD to develop models for video-based people tagging (consistent labelling) and behaviour monitoring across a distributed network of CCTV cameras for the enhancement of global situational awareness in a wide area.

Activity Sensing Technologies for Mobile Users – HUAWEI Technology – 2017-2018 – Leading Research Scientist

Multisource Audio-Visual Production from User-Generated Content – EPSRC – 2013-2016 – Leading Research Scientist

Blind Source Separation in Highly Reverberant Environments – Alexander von Humboldt Foundation Germany – 2011-2013 – PI